Meet Shengdong (Shen) Zhao

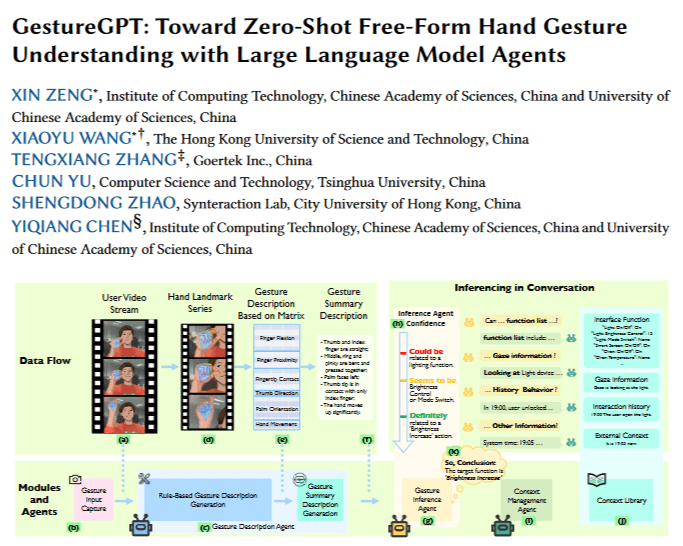

I am a full professor in the School of Creative Media and the Department of Computer Science at City University of Hong Kong (CityU). Previously, I was an Associate Professor in the Department of Computer Science at the National University of Singapore (NUS). I received my PhD in computer science from the University of Toronto and my master's degree from the School of Information Management & Systems at the University of California, Berkeley. I completed my undergraduate studies at Linfield College in Oregon, USA, with a double major in computer science and biology.

In January 2009, I founded the Synteraction Lab (formerly the NUS-HCI Lab). My research focuses on developing innovative interface tools and applications that simplify and enhance people's lives, such as Draco, which was named Best iPad App of the Year in 2016. My work regularly appears in top HCI conference proceedings and journals, including TOCHI, CHI, UbiComp, CSCW, UIST, and IUI.

I am also passionate about bridging academic research and industry, and I have served as a senior consultant to the Huawei Consumer Business Group. I serve regularly on the program committees of leading HCI conferences and was a papers co-chair for the ACM SIGCHI conferences in 2019 and 2020. In my leisure time, I enjoy reading, running, and exploring the beauty of nature.